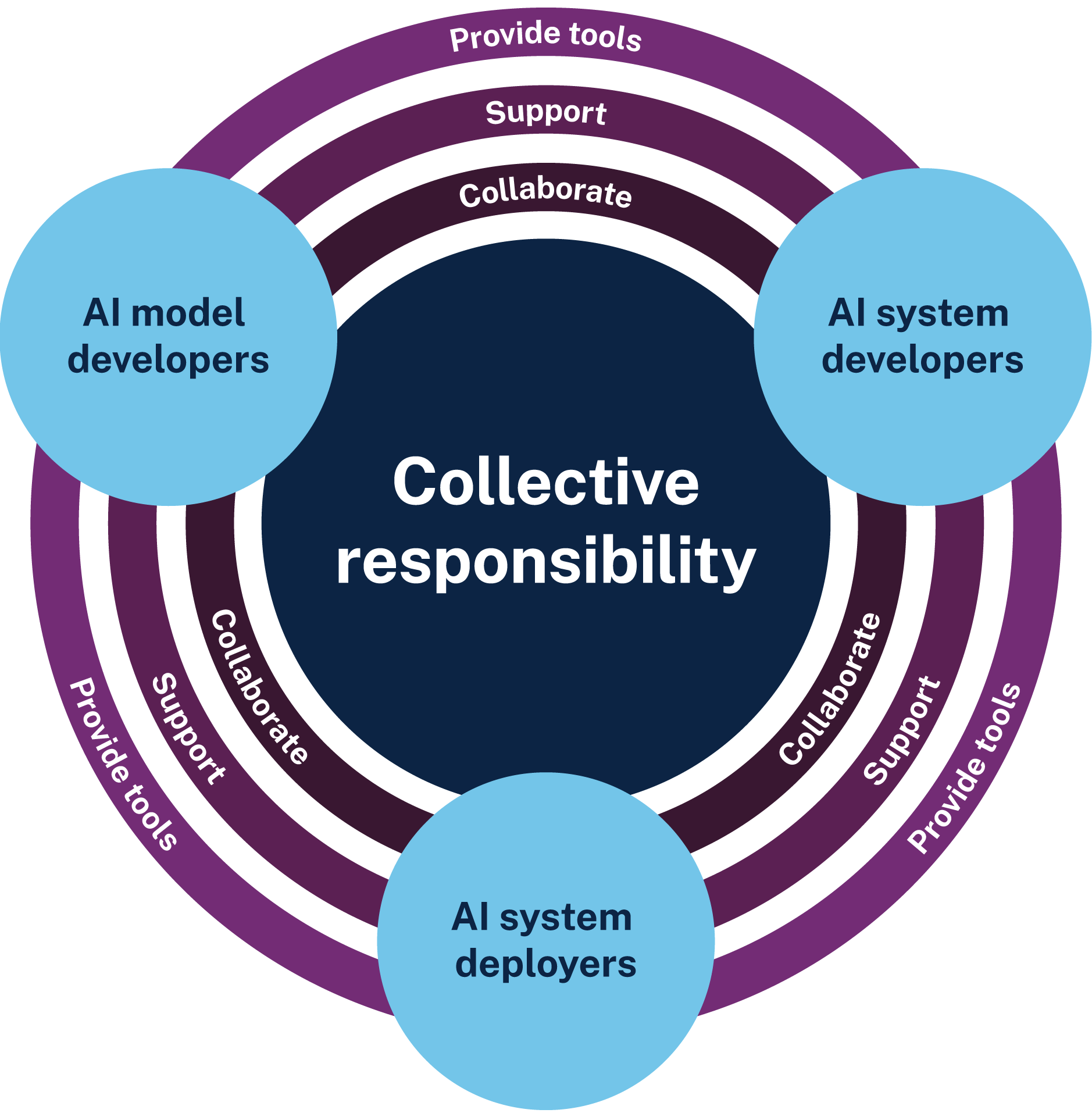

In this guidance, we talk about businesses as AI model developers, AI system developers and AI system deployers. Businesses may fall into more than one category. All 3 have a role to play in improving the transparency of AI‑generated content.

| AI system deployers | AI system developers | AI model developers |
|---|---|---|
| Apply user-friendly labels | Develop and apply system‑level tools | Develop model-level tools |
| Educate and verify | | Establish feedback with deployers |

AI system deployers

You are an AI system deployer if you are a business or person that uses an AI system to operate or to provide a product or service. This might look like:

- using chat assistants such as ChatGPT, Microsoft Copilot, Claude or Gemini to create content for use internally or externally

- using AI‑enabled image editors such as Adobe, Canva or Picsart

- giving your customers access to an AI chat assistant to answer frequently asked questions from your website

- using AI to monitor system performance

- using AI to handle user feedback

- using AI to maintain systems.

Examples of AI system deployers include Australian businesses that use AI to improve their operations, such as call centres that deploy AI to improve their customer support experience and minimise follow‑up calls.

Read more examples of AI system deployment in 'When to use transparency mechanisms'.