How to assess your AI-generated content

Step 1: assess potential negative impact

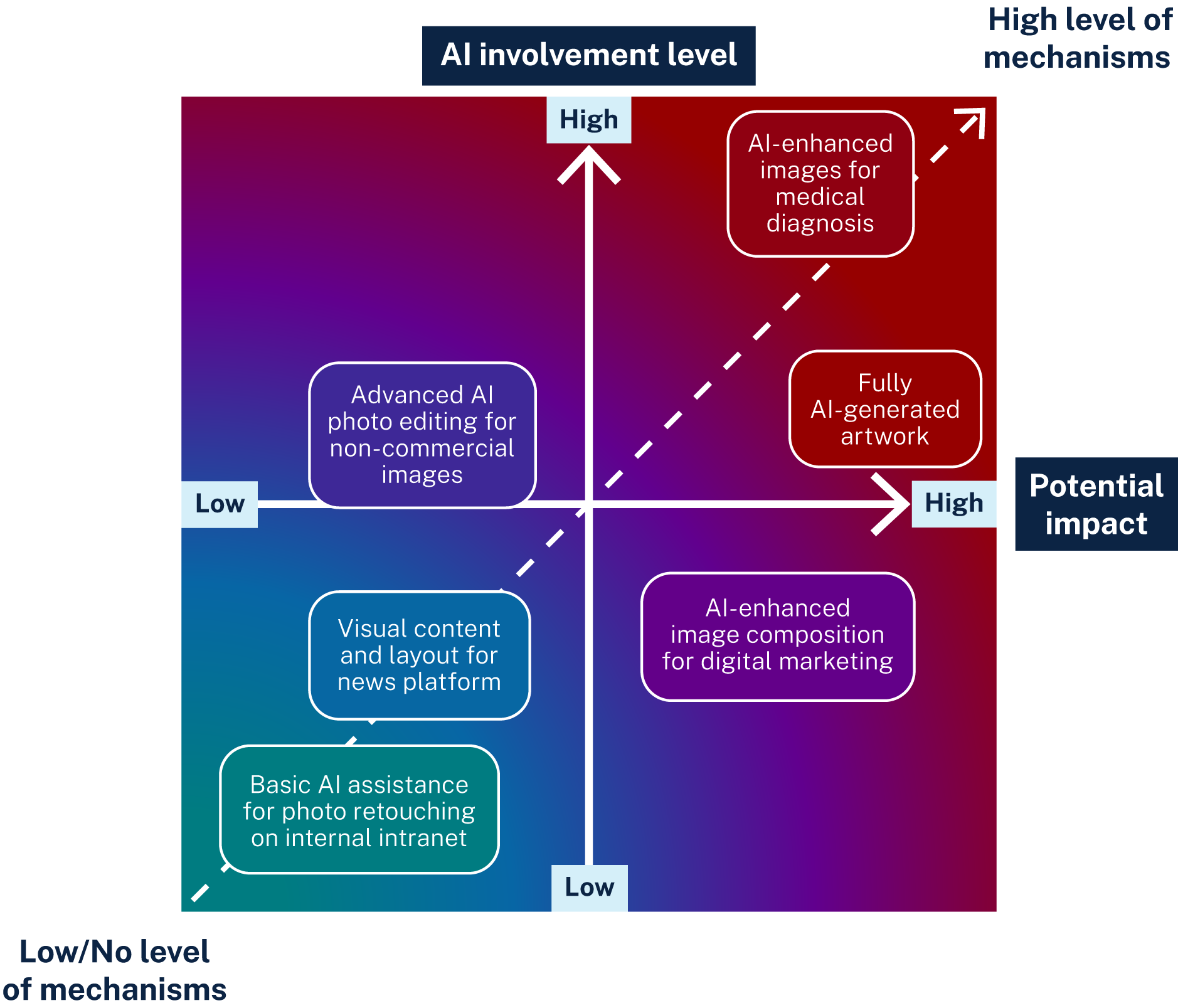

The potential impact of AI‑generated content depends on:

- its context

- how you use it

- the overall nature of your product or service.

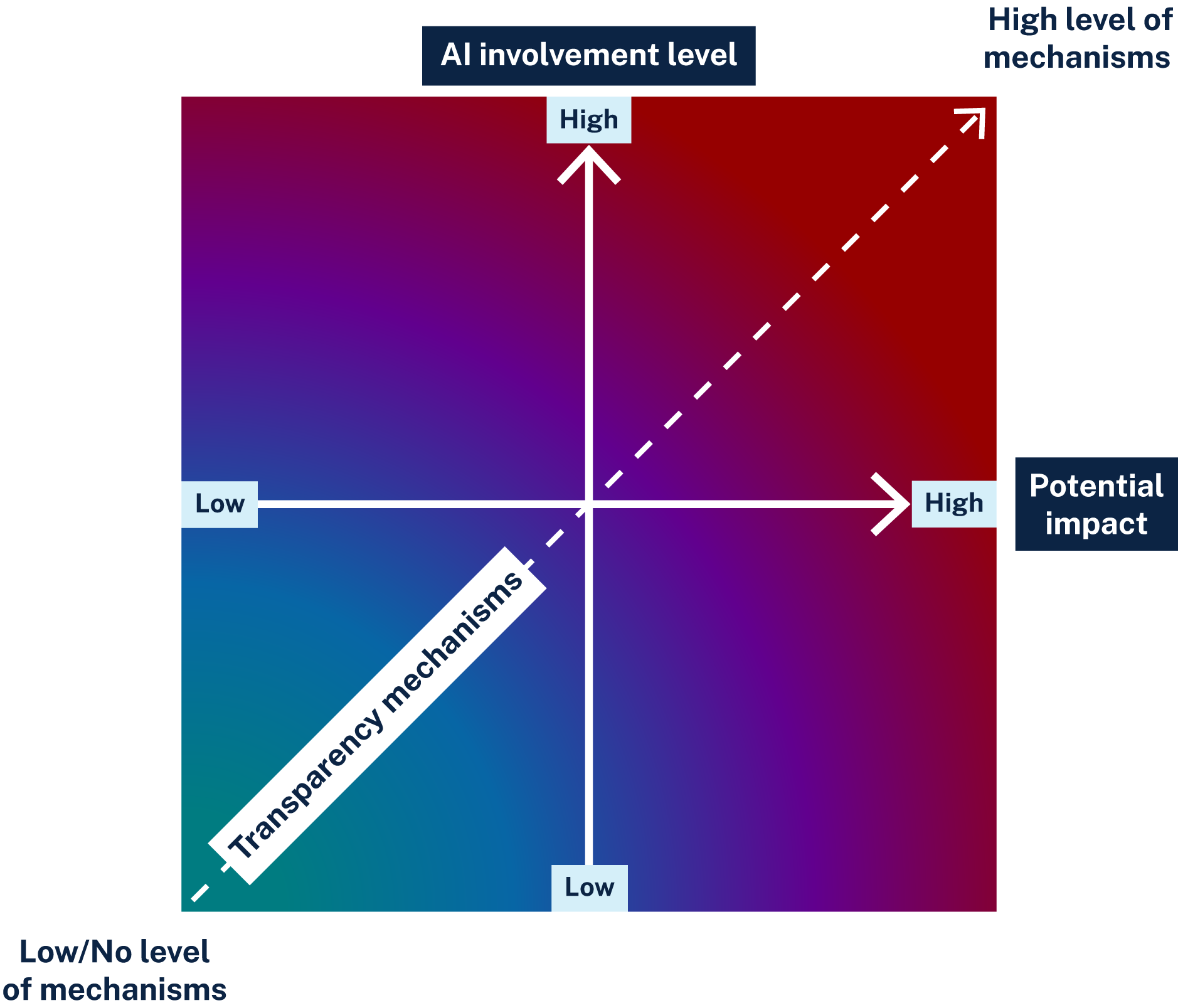

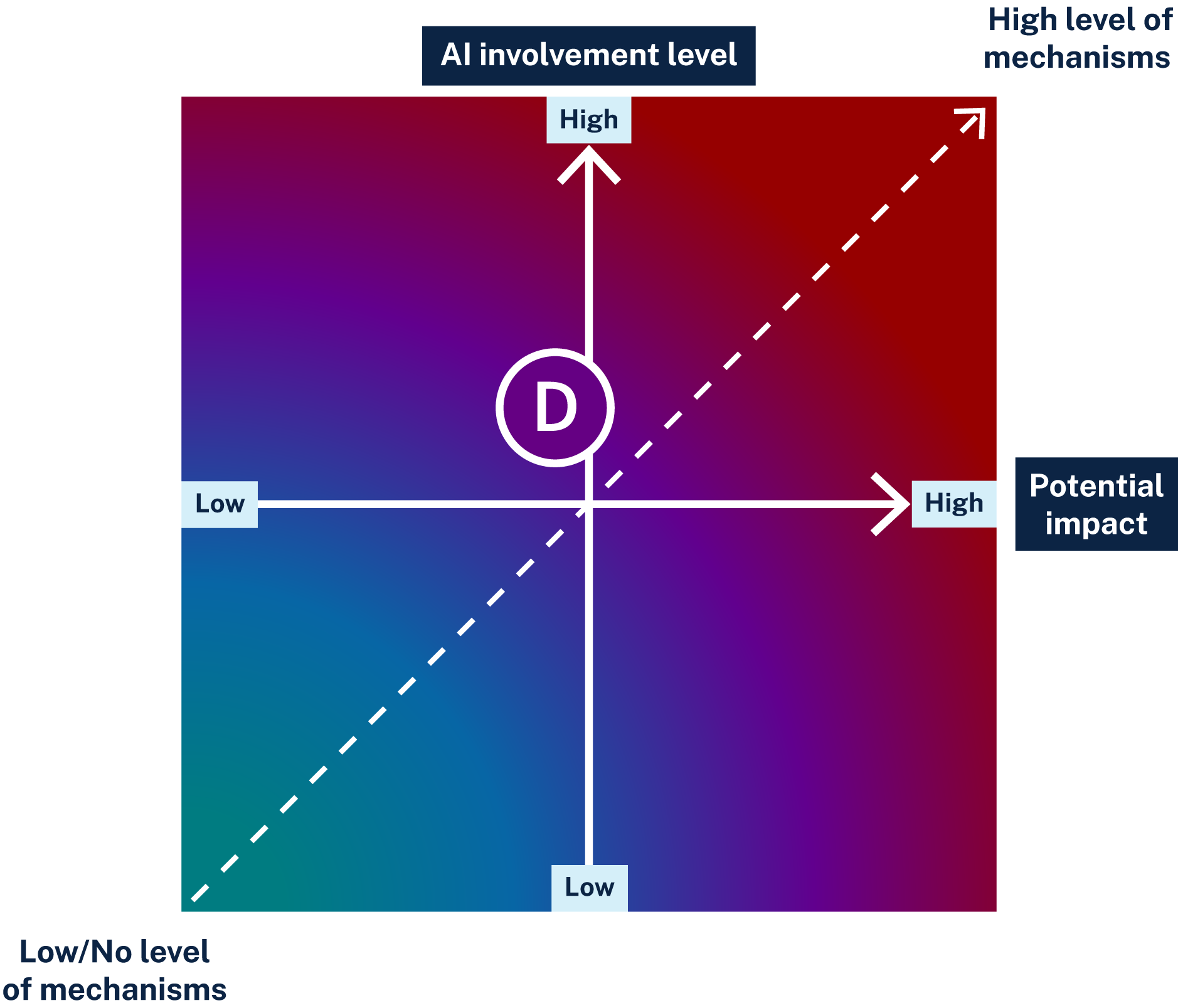

If the potential negative impact of your AI‑generated content is high, you need to use robust transparency mechanisms to make sure you can accurately communicate how you’ve used AI.

Consider whether people are likely to treat the content as authoritative.

For example, using AI‑generated content in a clinical setting could lead to misdiagnosis. In recruitment processes it could lead to a breach of employment law. In these circumstances, you’d need to use strong transparency mechanisms for AI‑generated content.

Consider how AI might negatively affect different aspects of people’s lives, including (though this list is not exhaustive):

- people’s rights

- people’s safety

- collective cultural and societal interests, particularly those of First Nations peoples

- Australia’s economy

- the environment

- the rule of law

- the context in which it is shared

- impacts on your business (such as commercial or reputational impacts).

Your business may already have risk assessment tools or frameworks that you can use to assess the likely impact of your AI‑generated content. You can also refer to the principles outlined in the government’s proposals paper for introducing mandatory guardrails for AI in high‑risk settings, which may help you assess your risk levels. You may also have regulatory obligations, for example under the Online Safety Act 2021, Australian Consumer Law and the Privacy Act 1988.
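If you do not already have a risk assessment tool, the impact areas listed above could be recorded in a simple checklist. The Python sketch below is illustrative only and is not an official framework. The three-point rating scale, the names `Impact`, `IMPACT_AREAS` and `overall_impact`, and the choice to take the highest single rating as the overall result are all assumptions made for this example.

```python
from enum import IntEnum


class Impact(IntEnum):
    """Hypothetical three-point scale; the guidance does not prescribe one."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Impact areas taken from the list in Step 1.
IMPACT_AREAS = (
    "people's rights",
    "people's safety",
    "collective cultural and societal interests",
    "Australia's economy",
    "the environment",
    "the rule of law",
    "the context in which content is shared",
    "impacts on the business",
)


def overall_impact(ratings: dict[str, Impact]) -> Impact:
    """Return the highest rating across all areas (a worst-case assumption).

    Raises ValueError if any area has not been rated, so that no area
    is silently skipped.
    """
    missing = [area for area in IMPACT_AREAS if area not in ratings]
    if missing:
        raise ValueError(f"Unrated impact areas: {missing}")
    return max(ratings[area] for area in IMPACT_AREAS)
```

Under these assumptions, a single high rating (for example, for people’s safety in a clinical setting) makes the overall result high, which would point towards robust transparency mechanisms.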

Step 2: assess AI involvement level

The extent of AI involvement in creating content affects the level of risk. When assessing AI involvement, ask:

a) How automated is the AI system that’s generating or modifying content?

- Systems with lower levels of responsible human oversight need stronger transparency mechanisms.

- Systems where AI serves as an assistive tool with substantial human oversight may not need approaches as strict.

- Fully automated systems, where human input is minimal or absent, may require more extensive transparency mechanisms. Those with human review and oversight may require less.

b) Has AI substantially modified or generated the content?

- If an AI system has substantially modified content, the content needs stronger transparency mechanisms.

- What counts as a substantial modification depends on context and the needs and expectations of your users.
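Questions a) and b) can be recorded as simple yes/no answers. The sketch below is one possible way to do that in Python. The `Involvement` fields and the rule in `needs_stronger_transparency` are assumptions for illustration. Whether a modification is substantial remains a judgement you make in context, and the code only records that judgement.

```python
from dataclasses import dataclass


@dataclass
class Involvement:
    """Hypothetical record of answers to questions a) and b)."""
    fully_automated: bool         # human input is minimal or absent
    human_review: bool            # a person reviews content before release
    substantially_modified: bool  # AI generated or substantially modified the content


def needs_stronger_transparency(inv: Involvement) -> bool:
    """Flag content for stronger transparency mechanisms.

    Assumed rule: low human oversight or substantial AI modification
    is enough to raise the flag.
    """
    low_oversight = inv.fully_automated or not inv.human_review
    return low_oversight or inv.substantially_modified
```

For example, `Involvement(fully_automated=False, human_review=True, substantially_modified=False)` describes AI used as an assistive tool with human oversight, and would not be flagged under this assumed rule.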

c) Is there potential for AI to substantially change the meaning of the content?

- Even minor changes to content can significantly change its meaning, potentially leading to users misinterpreting the content. For example, an AI editor omitting the word ‘not’ could substantially change the meaning of the content it’s editing.

- Low AI involvement can still lead to big changes in a piece of content’s meaning.

- Assess whether AI has altered the meaning of your content in ways that may affect your stakeholders’ interpretation.
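A small automated check can help surface edits like the omitted ‘not’ for a person to review. The sketch below uses Python’s standard `difflib` module to compare the original and AI-edited text word by word and report negation words that were added or removed. The function name `changed_negations` and the short `NEGATIONS` word list are assumptions for this example. It is a rough heuristic that misses contractions such as ‘don’t’ and many other meaning changes, so it does not replace human review.

```python
import difflib

# Illustrative word list only; a real check would need a broader set.
NEGATIONS = {"not", "no", "never", "without", "cannot"}


def changed_negations(original: str, edited: str) -> list[str]:
    """List negation words added ('+ ') or removed ('- ') by an edit."""
    before = original.lower().split()
    after = edited.lower().split()
    changes = []
    for entry in difflib.ndiff(before, after):
        # ndiff prefixes removed tokens with "- " and added tokens with "+ ".
        if entry[:2] in ("- ", "+ ") and entry[2:].strip(".,;:!?") in NEGATIONS:
            changes.append(entry)
    return changes
```

For example, `changed_negations("Do not take this with alcohol.", "Do take this with alcohol.")` returns `['- not']`, showing that the edit removed a negation.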