More about transparency

Your context and regulatory obligations (such as responsibilities under the Online Safety Act) will inform whether you need to use one or more transparency mechanisms.

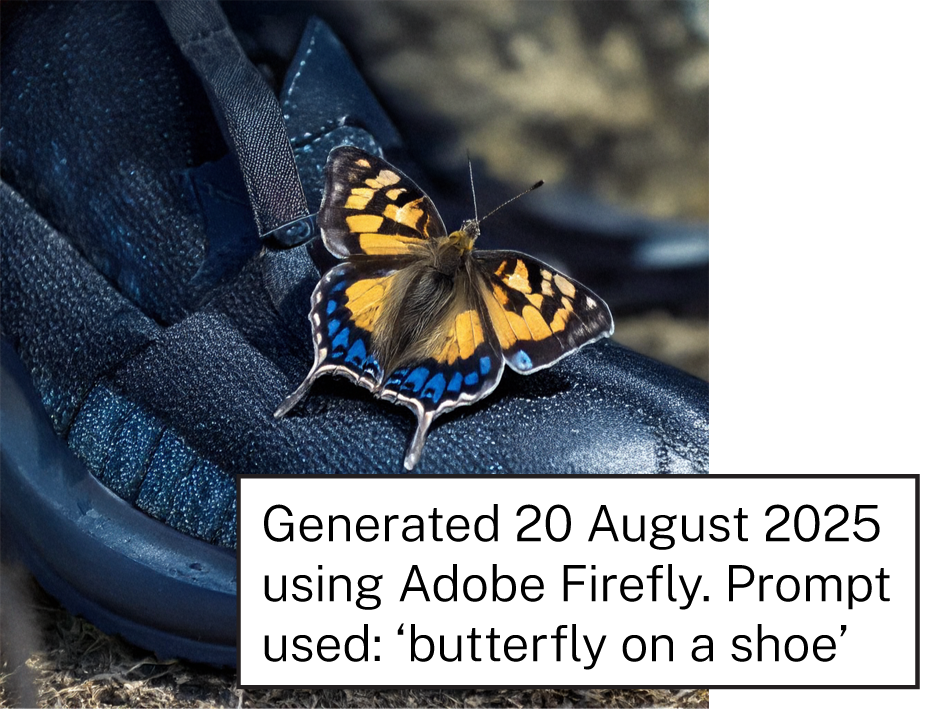

Content transparency mechanisms can help to build awareness and trust, and to improve accountability and safety. They can help users distinguish AI‑generated content from human‑authored material. This encourages users to think critically about the accuracy of the content they consume, which can reduce the risks of seriously harmful disinformation and misinformation.

Digital content transparency mechanisms are still evolving. There is not yet a standardised approach to transparency for AI‑generated content, but AI Safety Institutes are advancing research on technical approaches. Industry‑led initiatives such as the Coalition for Content Provenance and Authenticity (C2PA), which publishes an open technical standard for content provenance, are also being adopted.

Transparency mechanisms are not failsafe. They can be misused or tampered with, and they remain vulnerable to attack. As a business, you need to judge which transparency mechanisms best suit your context and how to implement them.

Read about the limitations of transparency mechanisms.