On 21 October 2025, we published the Guidance for AI Adoption, which outlines 6 essential practices for safe and responsible AI governance. This updated and simplified guidance for industry evolves the Voluntary AI Safety Standard.

We designed Australia’s first voluntary AI safety standard to help organisations develop and deploy AI systems in Australia safely and reliably. Adopting AI and automation is projected to contribute $170 billion to $600 billion to GDP. Australian organisations and the wider economy stand to gain significantly if they can capture this value (Taylor et al.).

The standard offers a set of voluntary guardrails that establish consistent practices for organisations to adopt AI in a safe and responsible way. The guardrails align with current and evolving legal and regulatory obligations and with public expectations. While the standard applies to all organisations across the AI supply chain, this first version focuses more closely on organisations that deploy AI systems. The next version will expand on technical practices and guidance for AI developers.

While there are already examples of good AI practice in Australia, organisations need clearer guidance. By adopting this standard, they will be able to use AI safely and responsibly.

The standard consists of 10 voluntary guardrails that apply to all organisations across the AI supply chain. The guardrails establish consistent practice for adopting AI in a safe and responsible way. They give all organisations certainty about what developers and deployers of AI systems need to do to comply with them.

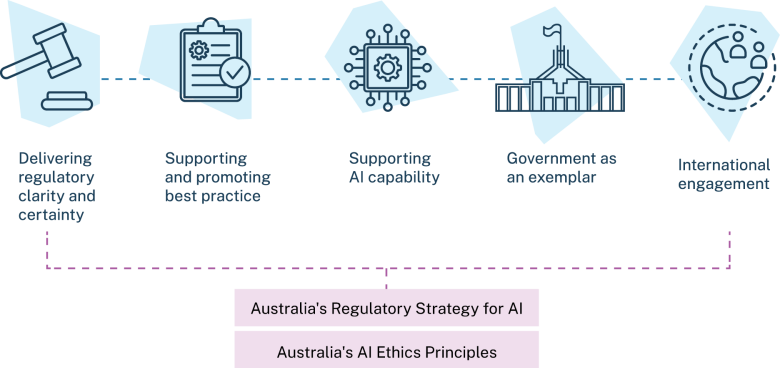

In its January 2024 interim response to the Safe and Responsible AI discussion paper, the government identified actions to take, including working with industry to develop this Voluntary AI Safety Standard. The standard sits alongside a broader suite of government actions enabling safe and responsible AI under 5 pillars, outlined in Figure 2. Actions under the 5 pillars include the Proposals Paper for Introducing Mandatory Guardrails for AI in High-Risk Settings, the National Framework for the Assurance of Artificial Intelligence in Government and the Policy for Responsible Use of AI in Government. The standard will continue to evolve alongside the government's broader activities to ensure alignment and consistency for safe and responsible AI.